The muon anomaly, first observed in 2001 in an experiment at Brookhaven, USA, persists. At that time, this slight discrepancy between the calculated and the experimentally determined value of the muon's magnetic moment was measured with a significance of about 3.7 sigma. This corresponds to a confidence level of 99.98%, or a 1 in 4,500 probability that a random fluctuation in the data caused the signal.
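To make the link between "sigma" and probability concrete, here is a minimal sketch. It assumes the quoted figures are two-sided tail probabilities of a normal distribution, which is an assumption on our part (the article does not say so), and the function name is purely illustrative.

```python
import math


def two_sided_p_value(n_sigma: float) -> float:
    """Probability that a Gaussian fluctuation deviates from the mean
    by at least n_sigma standard deviations, in either direction.
    Assumption: two-sided convention, purely Gaussian errors."""
    return math.erfc(n_sigma / math.sqrt(2.0))


for n_sigma in (3.7, 5.0):
    p = two_sided_p_value(n_sigma)
    print(f"{n_sigma} sigma: confidence {100 * (1 - p):.5f}%, "
          f"roughly 1 in {1 / p:,.0f}")
```

Under this convention, 3.7 sigma comes out at a little over 1 in 4,600, which is consistent with the rounded figure quoted above.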

© Jörg Steinmetz

Sabine Hossenfelder is a theoretical physicist who works on quantum gravity and physics beyond the Standard Model. She is currently a Research Fellow at the Frankfurt Institute for Advanced Studies. Her book “Das hässliche Universum” (“The Ugly Universe”) was published in 2018.

Coincidence is becoming less likely

With the announcement of the latest results from Fermilab two weeks ago, the significance increased to 4.2 sigma. The experiment at Fermilab, near Chicago in the US, has thus reached a confidence level of about 99.997%, or a 1 in 40,000 probability that the observed deviation is a coincidence. The new Fermilab measurement on its own has a significance of only 3.3 sigma, but because it reproduces the previous result from Brookhaven, the combined confidence is higher. Even so, the deviation still falls short of the 5 sigma discovery criterion that is common in particle physics.
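How two measurements of moderate significance add up to a stronger one can be sketched with a simple inverse-variance weighted average. The numbers below are not from this article: they are the values of the muon anomaly a_μ (in units of 10^-11) as publicly reported in 2021 for Brookhaven, Fermilab and the Standard Model prediction, and should be treated as illustrative inputs. The sketch also assumes uncorrelated Gaussian uncertainties, which is a simplification of the collaborations' actual combination.

```python
import math

# Assumed inputs (value, uncertainty) in units of 1e-11; not from the article.
BROOKHAVEN = (116592089.0, 63.0)
FERMILAB = (116592040.0, 54.0)
STANDARD_MODEL = (116591810.0, 43.0)


def combine(measurements):
    """Inverse-variance weighted mean of independent measurements."""
    weights = [1.0 / sigma ** 2 for _, sigma in measurements]
    mean = sum(w * value for w, (value, _) in zip(weights, measurements)) / sum(weights)
    return mean, 1.0 / math.sqrt(sum(weights))


def tension(measured, predicted):
    """Difference in units of the combined (quadrature) uncertainty."""
    return (measured[0] - predicted[0]) / math.hypot(measured[1], predicted[1])


combined = combine([BROOKHAVEN, FERMILAB])
for label, result in (("Brookhaven", BROOKHAVEN),
                      ("Fermilab", FERMILAB),
                      ("Combined", combined)):
    print(f"{label}: {tension(result, STANDARD_MODEL):.1f} sigma")
```

With these inputs the individual results land near 3.7 and 3.3 sigma and the combination near 4.2 sigma: the two experiments agree with each other, so averaging them shrinks the uncertainty while the gap to the prediction stays.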

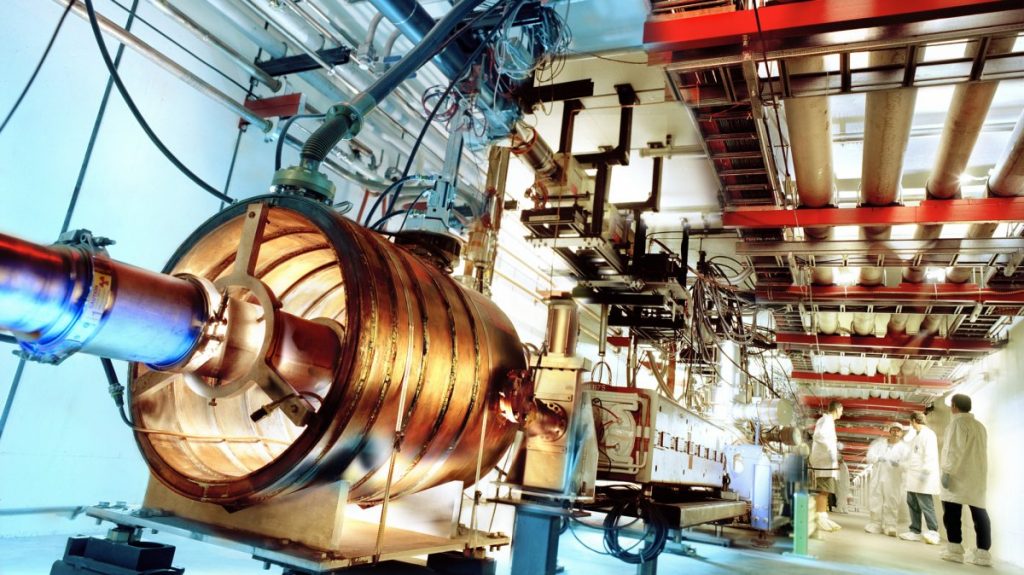

Physicists had been waiting anxiously for the Fermilab result, because it might call the Standard Model of particle physics into question. This model, which is about 50 years old, collects the building blocks of matter known so far and currently contains 25 particles. Most of them are unstable and therefore do not occur in the matter that usually surrounds us. They are created, however, in high-energy particle collisions. This happens in nature when cosmic rays hit the upper atmosphere, but unstable particles can also be produced in the laboratory using particle accelerators. This was done for the Fermilab experiment in order to measure the magnetic moment of the muons generated in this way.

The first muons were discovered in 1936. They were among the first unstable particles known to physicists. The muon is a heavier version of the electron; it is likewise electrically charged and has a lifetime of about 2 microseconds. For particle physicists that is a long time, which is why muons are suitable for precise measurements. The particle's magnetic moment determines how fast its spin axis precesses around the magnetic field lines. To measure this, physicists created muons and then used powerful magnets to make them circulate in a ring about 15 meters in diameter. The particles eventually decay, and the magnetic moment can then be inferred from the distribution of the decay products.
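As a rough illustration of the precession described above: in a storage ring, the muon's spin turns relative to its direction of flight at an angular frequency of approximately a·e·B/m, where a is the small "anomalous" part of the magnetic moment, e the elementary charge, B the magnetic field and m the muon mass. The sketch below uses this simplified formula. The field strength of 1.45 tesla and the rounded value of a are assumptions for illustration and do not come from this article.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19  # coulomb (exact SI value)
MUON_MASS = 1.883531627e-28          # kilogram (rounded reference value)
A_MU = 0.00116592                    # assumed, rounded anomalous moment
B_FIELD = 1.45                       # tesla, assumed storage-ring field


def anomalous_precession_hz(a_mu: float, b_field: float) -> float:
    """Simplified spin precession frequency relative to the momentum,
    omega_a = a_mu * e * B / m, converted from rad/s to hertz."""
    omega = a_mu * ELEMENTARY_CHARGE * b_field / MUON_MASS
    return omega / (2.0 * math.pi)


freq = anomalous_precession_hz(A_MU, B_FIELD)
print(f"precession frequency: {freq / 1e3:.0f} kHz")
```

Because the frequency is proportional to a, measuring the frequency and the field precisely gives the magnetic anomaly directly, which is the basic idea behind the ring experiment.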

More particles? More dimensions?

Usually the result is reported as the g-2 value, where g is the factor that sets the strength of the magnetic moment. This is because the value of g is close to 2, and physicists are primarily interested in the quantum contributions that show up as deviations from 2. These small quantum contributions come from fluctuations of the vacuum, which contains all particles in virtual form. Such virtual particles appear only briefly before they vanish again. This means that if there are more particles than those in the Standard Model, these particles must also contribute to the muon g-2, which is why the new measurement is so interesting. A deviation from the Standard Model prediction could mean that there are more particles than those currently known, or that some other kind of new physics is at work, for example additional spatial dimensions.
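To get a feeling for how small the deviation from 2 is, one can look at the largest single quantum contribution, the leading term of quantum electrodynamics, which equals the fine-structure constant α divided by 2π. This term and the value of α are textbook physics rather than part of this article, and the function names in the sketch are illustrative only.

```python
import math

ALPHA = 1.0 / 137.035999  # fine-structure constant (rounded, assumed input)


def leading_qed_term(alpha: float = ALPHA) -> float:
    """Leading quantum correction to (g - 2) / 2: alpha / (2 * pi)."""
    return alpha / (2.0 * math.pi)


def g_factor(anomaly: float) -> float:
    """g expressed through the anomaly a = (g - 2) / 2."""
    return 2.0 * (1.0 + anomaly)


a_leading = leading_qed_term()
print(f"leading term of (g-2)/2: {a_leading:.7f}")
print(f"resulting g:             {g_factor(a_leading):.7f}")
```

This single term already shifts g from 2 to about 2.0023. All remaining contributions, including any from unknown particles, only affect later decimal places, which is why the measurement has to be so precise.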


What should one make of the 4.2 sigma discrepancy between the Standard Model prediction and the new measurement? First, it helps to remember why particle physicists use the 5 sigma standard for new discoveries in the first place. It is not that particle physics is inherently more precise than other fields of science, or that particle physicists do much better experiments. It is mainly because particle physicists have a lot of data. The more data you have, the more likely you are to find fluctuations that look like a signal. Particle physicists began using the 5 sigma standard in the mid-1990s simply to spare themselves the embarrassment of many supposed discoveries later turning out to be statistical fluctuations.
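The effect of having a lot of data can be sketched with a short calculation: if you look in many independent places, the chance that at least one of them shows a large random fluctuation grows quickly. The sketch assumes independent searches, Gaussian fluctuations and a two-sided convention; the count of 1,000 searches is an arbitrary illustrative number.

```python
import math


def two_sided_p_value(n_sigma: float) -> float:
    """Chance of a Gaussian fluctuation of at least n_sigma, either sign."""
    return math.erfc(n_sigma / math.sqrt(2.0))


def chance_of_false_signal(n_sigma: float, n_searches: int) -> float:
    """Probability that at least one of n_searches independent searches
    fluctuates by n_sigma or more: 1 - (1 - p) ** n, computed stably."""
    p = two_sided_p_value(n_sigma)
    return -math.expm1(n_searches * math.log1p(-p))


for n_sigma in (3.0, 5.0):
    chance = chance_of_false_signal(n_sigma, 1000)
    print(f"{n_sigma} sigma threshold, 1000 searches: "
          f"{100 * chance:.2f}% chance of a fake signal")
```

With a 3 sigma threshold, a fake signal somewhere among 1,000 searches is close to certain; with a 5 sigma threshold it stays well below one percent.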

But of course 5 sigma is a fairly arbitrary threshold. Particle physicists also like to discuss anomalies that fall well short of it, and there have been quite a few of these over the years. For example, the Higgs boson was already “discovered” once in 1996, when a signal of about 4 sigma appeared at the Large Electron-Positron Collider (LEP) at CERN and then vanished again. Also in 1996, a quark substructure was found at about 3 sigma. It disappeared as well. In 2003, signs of supersymmetry (a popular extension of the Standard Model) were observed at LEP, also at about 3 sigma. They, too, were soon gone. In 2015, a diphoton anomaly was seen at the LHC, which stayed at about 4 sigma for a while before disappearing again. There were even some astonishing 6 sigma discoveries that later vanished, such as the “superjets” seen in 1998 at the Tevatron (nobody really knows what they were) or the pentaquarks spotted at HERA in 2004. Pentaquarks were actually discovered only in 2015.

This gives us a good basis for judging how seriously to take 4.2 sigma. In favor of the g-2 anomaly, however, one can say that it has not weakened over time but has instead grown stronger.
